Saxophone and Electronics: Carl Raven

In January 2021 I was awarded an Arts Council of England grant to explore and develop saxophone improvisation with electronics, and to create a natural way of improvising music that combines saxophone with electronic sound. The project lasts 10 months, culminating in a concert. To help me, I have enlisted John Butcher, Mauricio Pauly and Matt Wright. I have devoted 1 day a week entirely to the project.

I'm entering the project as an electronic music novice. Here is a log of my discoveries and actions each week.

15th January 2021

Day 1: I contacted John Butcher and Mauricio Pauly. They're both happy to join me on my journey. A Zoom call with John is organised for very soon!

Today I went through the Ableton tutorials in the software, and also the Max for Live effect-building videos. I built a simple delay and reverb. The main idea was to attach my Behringer FCB1010 to the PC via MIDI and get the foot switches to control various features in Live. After watching a YouTube video on programming the FCB1010, I found that MIDI notes were the best form of communication between the two, and I managed a short five-part looping piece with soprano sax. I'm still unsure whether this is the best way to go, but it's possible to work in this way.

Here's the helpful link!

https://www.youtube.com/watch?v=wO3XD_HU-tE

I also connected up the Arduino (after finding a driver for my non-branded Arduino). I connected up my breadboard, used the Arduino Max for Live connection kit and managed to control a gain control in Live with the potentiometer. Thanks to Alastair Penman for his help!

Also chatted to Larry Goves about organising a concert and discussing people who could help on the journey.

22nd January 2021

Day 2: Today my aim was to get a volume reading from the saxophone and send it to a control on another filter.

I discovered that an envelope follower tracks a live audio feed and then generates a control signal which can be assigned to any other control within Ableton. Here's how I did it.

1. Check monitor on the open channel is on.

2. Open the envelope follower on the open audio channel. It's in the Devices-Audio Effects-Max Audio Effect folder.

3. I then recorded a piano loop on an instrument channel and looped this.

4. Set the map on the envelope follower and connected this with the volume on the piano loop channel. When I played, the loop started and stopped with the activity into the mic.
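As a rough illustration of what an envelope follower does to the incoming audio, here is a minimal Python sketch. It is not Ableton's implementation: the function name, the one-pole smoothing approach and the attack/release times are all assumptions made for illustration.

```python
import math


def envelope_follower(samples, sample_rate=44100, attack_ms=10.0, release_ms=200.0):
    """Track the loudness of an audio signal with a simple one-pole follower.

    All parameter values are illustrative assumptions, not Ableton settings.
    """
    # Convert the attack and release times into per-sample smoothing coefficients.
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))

    env = 0.0
    out = []
    for x in samples:
        level = abs(x)  # rectify: only the size of the sample matters
        # Rise quickly when the input gets louder, fall slowly when it gets quieter.
        coeff = attack if level > env else release
        env = coeff * env + (1.0 - coeff) * level
        out.append(env)
    return out


# 0.1 s of full-scale signal followed by 0.1 s of silence.
env = envelope_follower([1.0] * 4410 + [0.0] * 4410)
```

The resulting list rises towards 1.0 while the input is present and decays gradually afterwards; a value like this is what gets mapped onto the loop channel's volume.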

I then wanted the pitch of the saxophone to be a trigger/control for something in Live. I got stuck on Max patches which follow audio and transmit MIDI: my Max8 didn't have a 'fiddle~' object. I downloaded it but am unsure how to install it into Max for Live.

I then bought the TRIGG.ME patch, which converts the mic signal to a MIDI note trigger. The threshold can be set so that a certain volume level triggers a preset MIDI event. This could be a sample or a virtual synthesiser. Pitch recognition is still an ongoing project.
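The idea of a volume threshold firing a MIDI event can be sketched in a few lines of Python. This is only an illustration of the general technique, not how TRIGG.ME works internally; the function name, the threshold values and the note number are assumptions.

```python
def threshold_triggers(levels, threshold=0.3, release=0.2, note=60):
    """Turn a stream of volume levels into note-on/note-off events.

    A note starts when the level reaches `threshold` and stops once it
    falls below `release`. Using a lower release level (hysteresis) is an
    assumption here; it stops the note chattering around the threshold.
    """
    events = []
    active = False
    for i, level in enumerate(levels):
        if not active and level >= threshold:
            active = True
            events.append((i, "note_on", note))
        elif active and level < release:
            active = False
            events.append((i, "note_off", note))
    return events


events = threshold_triggers([0.0, 0.1, 0.35, 0.5, 0.25, 0.1, 0.4])
```

Each event carries the position in the level stream, the event type and the MIDI note, which is enough to drive a sampler or synthesiser.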

Also, I'm interested in spectral analysis of the saxophone triggering different events in Live. For another day.

In the afternoon I wired up my new £5 pressure sensor to the Arduino and breadboard, hoping the pressure sensor could at some point be mounted on my saxophone. After finding an Arduino wiring YouTube video and finding a resistor, it worked a treat with the Arduino Max for Live connection kit. This could control Live filters as I play. Looking forward to trying it with some live effects. Here's a video of the setup...

https://photos.app.goo.gl/cUchPnRpuZBS6oHg6

30th January 2021

A busy week this week. I had 2 initial meetings, one with John Butcher and one with Mauricio Pauly. Through Mauricio I was introduced to some new artists: John Hopkins, LeQuan Ninh, Shiva Feshareki and Lee Patterson (who also came up in conversation with John Butcher).

The name of most interest to me was Rodrigo Constanzo. He has invented an M4L object called C-C-Combine which looks at a bank of samples and finds the nearest timbres/pitches to a live source or a pre-existing recording/sample. After discussing the possible output of my system in terms of having material to bounce from, the thought of learning to use modular synthesizers as musical output didn't seem the way to go. The idea of a bank of samples triggered by the computer system seems like a better way forward and could provide a starting point for a composition or an idea to improvise on. Maybe a system which listens to an improvisation, grabs ideas from it, stores them and uses them in an interactive way.

I chatted with John for an hour on Friday too. The conversation began with me asking him about process, the pre-thought that goes into creating improvised pieces, and how certain recordings I am drawn towards were made. John told me of his experiments with John Durrant (sound processing and electronic music composer/violinist) and his experiences with gates triggering material (Secret Measures album). He was also inspired by an acoustic space in New York he was invited to record in (Bell Trove Spools album), so using a space (or virtual space) in order to filter ideas towards a certain sound. We also talked about the problems of wind/electronics music, where quite often the natural sound of the instrument is too loud compared to any output from the speakers.

Then we began talking about practice techniques and how pieces were devised. He said that in his early days of solo performing he prepared thoroughly and knew some outlines and ideas before performing. "Now the concert playing is enough", but that comes after years of experience... Ideas would be very general, e.g. following melodic areas, making connections between multiples etc. We discussed creating practice ideas to enable improvisation. We also chatted about transcribing solos and learning in the traditional jazz improvisation way, but this was not something John had encountered much of. Joni Silver, Tim O'Dwyne and Bill Shoo had all transcribed John's music. Plenty of recorded material to listen to, plenty to practise.

The Arduino code has been modified to make the pressure output curve more linear and much easier to control. A Bluetooth module has been fitted but not programmed, and I ordered a battery pack and an accelerometer to experiment with.

In Live I experimented with the MIDI triggers. I worked out the main functions of 'Sampler' and also how to trigger mapped samples. I triggered 3 different samples assigned to 3 MIDI notes, all at different audio levels, rather like gates. Here's a quick instruction:

1. Record sample in audio channel

2. Set up MIDI channel, load up a sampler

3. Drag the sample into sampler.

4. Set start of sample at start, sustain mode to 2 arrows, release mode to 2 arrows.

5. In filter/global, add release time so the end of the sample plays even after the trigger is not active.

6. Place Trigg.me receive before sampler, send goes on the saxophone input channel. Don't forget to enable input.

I also wanted to get parallel intervals from the live saxophone channel. I experimented with 2 plugins: one was the vocoder (octaves mod patch) and the other was 'Grain Delay'. I could set the delay to be very small, but then adjust freq and pitch to obtain different intervals. It also has added random functions which were fun: RandPitch and Spray. When the Arduino is workable on the saxophone I'll try to control the Grain Delay variables with the pressure sensors. I feel the vocoder patch is for another day: it looks pretty deep and I didn't fully understand why it was outputting what it was.

It seems the journey is opening up and the target is becoming ever more uncertain, but finding out things and meeting people and talking about their ideas is really a journey I'm enjoying. Mauricio and John have been brilliant and very keen to be part of the project. Thank goodness!

5th February

Two meetings this week, one with Rodrigo Constanzo, one with Matt Wright.

Rodrigo gave me info and links to instrument builders and modifiers who combine electronic sensors and audio sensors to trigger and control computer and sampler/synthesiser based instruments. He also suggested checking out the NIME site, where there's a massive amount of info regarding new musical instruments and modified instruments.

We talked about using the sound of the saxophone itself to control parameters in Live. R had designed a MAX patch which measured and rounded numbers taken from the instrument, including pitch, volume and tone/spectral centroid (taken from an EQ reading). These numbers were quite messy and needed heavy rounding, of course.
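To give a feel for what taming messy analysis numbers can look like, here is a small Python sketch that smooths a jittery stream and then rounds it. It is not Rodrigo's patch: the function name, the exponential smoothing and the default values are assumptions made purely for illustration.

```python
def smooth_and_round(values, alpha=0.2, step=1.0):
    """Smooth a jittery stream of readings, then round to the nearest `step`.

    `alpha` controls how quickly the smoothed value follows new readings
    (smaller = steadier). Both defaults are illustrative assumptions.
    """
    smoothed = []
    current = None
    for v in values:
        # Exponential moving average: blend each new reading with the running value.
        current = v if current is None else alpha * v + (1 - alpha) * current
        smoothed.append(round(current / step) * step)
    return smoothed


# A pitch reading wobbling around 440 Hz settles on a steady value.
steady = smooth_and_round([440.2, 439.7, 440.4, 439.9])
```

A steadier number like this is much easier to map onto a dial in Live than the raw readings.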

R's MAX programming was really advanced. We talked about how he developed this and a good way to start. There is a Kadenze course, which I have started. I am now unsure whether my system will exist in MAX or whether it will be in Live with Max patches. I downloaded MAX8 to try out some of the Kadenze course. He also steered me towards the FluCoMa website (externals, which are Max objects written by other programmers) and the work of Hans Leeuw. On external sensors, motion can be split into natural versus unnatural gesture, with the aim of mapping natural gestures onto Live objects.

Matt is a composer, improvisor and electronic musician who works with legendary saxophonist Evan Parker.

We talked about setup (permanent or ever-changing), types of patches he uses, ways of manipulating samples and creating structure using canon with sample triggering and delay. Although he does program in MAX he now tends to use Live in live performance. We talked about the compositional process being similar to the improvisation process, how time feels when improvising and also utilising a multi speaker setup with his ideas on delay.

He mentioned a few players to check out, most notably Mats Gustafsson and Shabaka Hutchings.

This week the Arts Council grant arrived (part 1), so I could then order my DPA 4099 mic and also register my copy of Live at last! Richard Lewis also wrote some Arduino code for me to add my accelerometer to the sensor project. Today I happily got the Arduino talking to Live with the pressure and accelerometer sensors working. Some mini rechargeable batteries arrived along with a second pressure sensor. The system will now be 2 pressure sensors and 1 accelerometer. The next step is sorting the Bluetooth communication between the Arduino and Live, then making it a practical object to tie to a saxophone. Here is a video of the Arduino so far, plugged into Live.

https://photos.app.goo.gl/AfSoDBuoYTZzGiCJ9

From last week, I began some transcribing of John Butcher, 'First Dart' recording. More to follow!

12th February

A very technical day today. The final push to get the saxophone controller working. I received some new code from Richard and, after a large amount of experimenting, got the Bluetooth module and the battery working nicely with Live. (I had to revisit my GCSE physics knowledge to calculate resistors in series from my electronics pack!) The accelerometer and pressure sensors work wirelessly. Just some voltage regulation issues with inputs A0 and A1 to fix now, then it's on to the stripboard. I ordered a soldering iron, solder and an Arduino Nano to make the thing as small and light as possible. Circuit diagram almost drawn.

I finished the session today with more Kadenze videos from Matt Wright (a different one, from Stanford University) to learn more about Max8. Learned about a few more Max objects.

Downloaded Max8 standalone to see if my system should/could be built around this instead of Live. Still undecided. Rodrigo's Pitch/volume/timbre sensor software works with the extra Max objects (externals). Now need to work out a way to get this into Max for Live and to find out where the externals sit within Windows. It seems all this is geared up for Mac users primarily...

Also, the Mats Gustafsson CD and the Evan Parker/Matt Wright CD arrived. Some inspiring stuff.

19th February

Soldering nightmare! I spent the main part of the day trying to learn how to solder, last done in 1990 in GCSE technology... Richard also helped with some Bluetooth power issues, some soldering and the use of Arduino Nanos. The Bluetooth connection seemed sketchy and lost contact with Live.

To try to achieve something of any worth, I opened up the Simple Onset Descriptors Max patch from Rodrigo. I managed to get the externals from the FluCoMa website working with Windows. The patch wouldn't open in M4L so I emailed for help. A reply came right away with a great explanation and an M4L version. I now have to learn how the M4L system handles controls for other dials and inputs using the live.remote~ and live.object objects. It looks like I need to get involved with the LOM (Live Object Model) inside M4L to control other devices from Rodrigo's patch. I learned you can save a patch created in Max8 as an M4L object. I just needed to add an input from Live to the object.

https://www.youtube.com/watch?v=AsC9GGSypcw

This video from Mari Kimura helped. Something to look at another day.

25th February

After last week's all-tech, no-music day, I decided to play first to try some sounds, some Ableton packs and some new features after upgrading my Live to Live 11.

I had to spend a couple of hours sorting out a Windows .dll error, as the Live update wouldn't run after installation. I had to reinstall 2015 C++ and then uninstall/reinstall Live.

The DPA 4099 worked incredibly well to eliminate feedback so was able to play with the Genelecs on.

To simulate the yet-to-be-built Arduino controller, I hooked up my FCB1010 and used the control/expression foot pedals as a variable controller on the PitchLoop89 plugin. I also attempted to use some of the 10 footswitches as record/playback controls in Live. It worked well enough, but it was quite frustrating as the recorded material couldn't be played back at the same time as the live saxophone on the same channel through PitchLoop89. I need to look into whether this is possible. I had to learn how to use the FCB1010 Manager software to create a SysEx file and send it to the foot pedals via MIDI. Hopefully I won't have to do this again. Ableton prefers note messages for controlling on/off switches.

The freeze function in PitchLoop89 was very cool. Made an improvisation using this combined with some standard Live functionality. When making recordings of improvisations, I discovered that if wet/dry was set to 100% the arrangement window didn't record any live saxophone!

Played with DownSpiral too, a delay/pitch plugin and made a couple of improvisations using this. I still had to use the mouse/screen to do what I wanted. Having a think whether a launchpad type hardware device will be of use to avoid dragging recorded loops to spare channels.

A general thought when playing was it was easy to find interesting sounds, but to create sound when not playing was the challenge.

At the end of the day, Richard was in contact with a soldering update and some further design ideas for the controller. Two 3.7 V batteries seemed the way to go to avoid Bluetooth dropout.

4th March

Today was a mixture of programming and experimentation with sounds.

I used Rodrigo's Simple Onset Descriptors patch and tried to get a numerical output which numbered the notes of the alto. The idea was then to use a software sampler, turn the numerical output into a MIDI message and trigger the sampler from pitches on the saxophone. I'm still working on how to get the MIDI output into a Max or Ableton instrument.

My next project was to assign the volume output from Rodrigo's patch to a controller inside an audio plugin. I made a patch which reads a selected dial in the audio plugin (or a system dial, fader etc.). It took a good while for me to work out how to divide the output, an integer, by 100 to 3 dp without Max rounding to the nearest whole number (answer: divide by 100.000).
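The underlying issue is integer versus floating-point arithmetic: in Max, a divide object given a whole-number argument works in integers, whereas a decimal argument makes it work in floats. The same distinction can be shown in Python, used here only as an analogy; the function names are hypothetical.

```python
def scale_as_integer(value):
    """Integer division: the fractional part is thrown away."""
    return value // 100


def scale_as_float(value):
    """Floating-point division, kept to 3 decimal places."""
    return round(value / 100.0, 3)


print(scale_as_integer(57))  # the whole-number result loses the fraction
print(scale_as_float(57))    # the float result keeps it
```

For the non-negative readings used here the two languages behave alike; for negative numbers Python's `//` rounds down rather than towards zero, so the analogy is only approximate.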

https://www.youtube.com/watch?v=AsC9GGSypcw

Thanks to Mari Kimura for this video. I made a button in Rodrigo's patch which assigns a plug-in dial to be controlled by the volume. It was fun to link the volume of the saxophone to a pitch dial inside PitchLoop89. I'm hoping to add to this to make it more usable, and to add other controls assignable from the other 2 parameters.

The next plan was to try out Looper, another Ableton plug-in. I was hoping Looper would mean I didn't have to control the main functions of Ableton (recording my live samples into the boxes on the main screen). Assigning foot switches to the functions of Looper was straightforward, but it was hard to replicate the flexibility of the Ableton record/playback system. I'm still frustrated that you cannot keep one channel open, record on it, then play back at the same time as performing through the same audio plugins. Moving the sample across seems to be the only option, but that's impossible whilst playing saxophone!

Listening-wise, I listened more to Mats Gustafsson. My biggest worry is creating sound convincingly whilst not playing, and being in control of structure whilst playing. I still can't decide whether an Ableton controller (like Push) will be necessary. Using footswitches is terribly awkward, but a controller is hand control only. Mats uses drones and changing textures really well. I'd like to explore controlling these types of sound using the Arduino controller.

Which brings me to the Arduino. All soldered and working with 2 batteries. Richard Lewis has been HUGELY helpful. A brilliant and friendly guy. Next week I'll try to mount this onto the alto, find a way to calibrate the accelerometer and mount the pressure sensors without trashing my alto!

11th March

Today started slowly. My plan was to do 2 things: to get a MIDI output from raw data coming in from the saxophone, and to mount the Arduino and map the pressure sensors and the accelerometer to some variables in an audio effects processor.

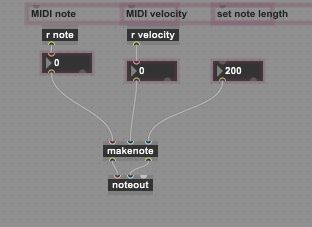

Before lunch I tried to work out in Max how to turn a piece of data into a note-on message, then send this note-on into a Live instrument. The input variables were the pitch and the volume from the microphone. The idea was to translate pitch in Hz to a MIDI note and the volume to a 0-127 velocity variable.
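The two conversions can be sketched in Python as follows. The pitch formula is the standard equal-temperament mapping with A4 = 440 Hz = MIDI note 69. The volume mapping is an assumption: it supposes the level arrives in decibels between -60 dB and 0 dB and scales it linearly, which may not match what the Max patch actually outputs. The function names are hypothetical.

```python
import math


def hz_to_midi(freq_hz):
    """Convert a frequency in Hz to the nearest MIDI note number (A4 = 440 Hz = 69)."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    note = int(round(69 + 12 * math.log2(freq_hz / 440.0)))
    return max(0, min(127, note))  # keep inside the MIDI note range


def level_to_velocity(level_db, floor_db=-60.0):
    """Map a level in dB onto a 0-127 velocity.

    Assumes levels run from `floor_db` (silent) up to 0 dB (full scale);
    this range is an illustrative assumption.
    """
    clamped = max(floor_db, min(0.0, level_db))
    return int(round(127 * (clamped - floor_db) / -floor_db))


# A concert A played at full level.
note, velocity = hz_to_midi(440.0), level_to_velocity(0.0)
```

Note that many MIDI instruments treat a note-on with velocity 0 as a note-off, so in practice the quietest playable level may need to map to 1 rather than 0.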
